Last night, Apple made a huge announcement that it’ll be scanning iPhones in the US for Child Sexual Abuse Material (CSAM). As a part of this initiative, the company is partnering with the government and making changes to iCloud, iMessage, Siri, and Search.

However, security experts are worried about surveillance and the risk of data leaks. Before looking at those concerns, let’s understand what exactly Apple is doing.

How does Apple plan to scan iPhones for CSAM images?

A large set of features for CSAM scanning relies on fingerprinted images provided by the National Center for Missing and Exploited Children (NCMEC).

Apple will scan your iCloud photos and match them against the NCMEC database to detect any CSAM images. The company is not doing this in the cloud, though; it performs the check on your device. It says that before an image is sent to iCloud storage, the algorithm will check it against known CSAM hashes.

When a photo is uploaded to iCloud, Apple creates a cryptographic safety voucher that’s stored with it. The voucher contains the details needed to determine whether the image matches known CSAM hashes. The tech giant won’t be able to see these details unless the number of CSAM images in your iCloud account goes beyond a certain amount.

Keep in mind that if you have iCloud sync turned off on your phone, the scanning won’t work.
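To make the flow above concrete, here is a minimal Python sketch of on-device matching with a review threshold. It is a toy under stated assumptions, not Apple’s implementation: it swaps in a plain SHA-256 digest for the image fingerprint (so it only catches byte-identical files), represents the safety voucher as an ordinary dictionary rather than anything cryptographic, and uses a made-up threshold, since the announcement only says "a certain amount." The names `fingerprint`, `make_voucher`, `scan_uploads`, and `REVIEW_THRESHOLD` are hypothetical.

```python
import hashlib

# Hypothetical threshold for illustration; the real value is not given here.
REVIEW_THRESHOLD = 3


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in fingerprint: SHA-256 of the raw bytes (exact matches only)."""
    return hashlib.sha256(image_bytes).hexdigest()


def make_voucher(image_bytes: bytes, known_hashes: set) -> dict:
    """Toy 'safety voucher' recording whether the image matched a known hash."""
    return {"matched": fingerprint(image_bytes) in known_hashes}


def scan_uploads(images: list, known_hashes: set, icloud_sync_on: bool = True) -> dict:
    """Check each image on the device before it would be uploaded."""
    if not icloud_sync_on:
        # With iCloud sync off, nothing is uploaded, so nothing is checked.
        return {"vouchers": [], "flagged": False}
    vouchers = [make_voucher(img, known_hashes) for img in images]
    matches = sum(v["matched"] for v in vouchers)
    # The account is only surfaced once matches go beyond the threshold.
    return {"vouchers": vouchers, "flagged": matches > REVIEW_THRESHOLD}
```

In this sketch a single match never flags an account; only the count of matches across all uploads does, which mirrors the threshold idea described above.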

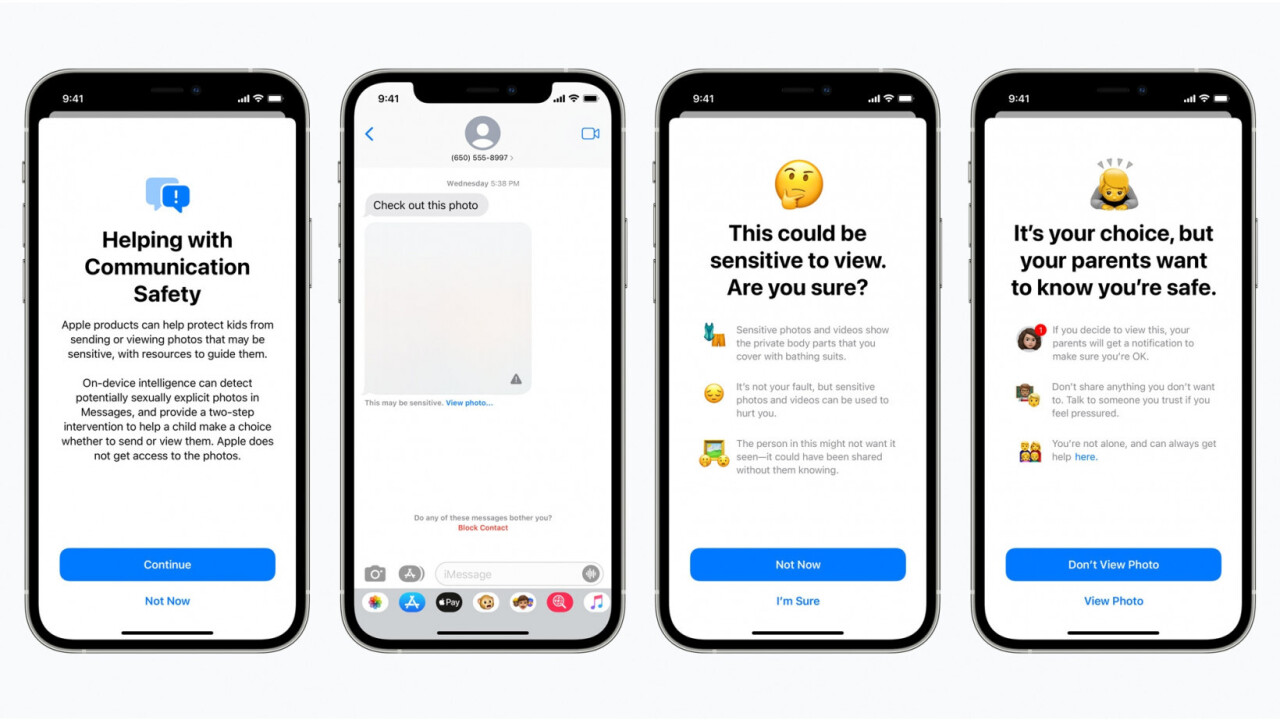

For iMessage, Apple will scan images on the device and blur those it detects as sexually explicit. Plus, when a child views such an image, parents will receive a notification about it, so they can take appropriate action.

If a child is trying to send such an image, they’ll be warned, and if they go ahead, a notification will be sent to parents.

It’s important to note that the parental notification will only be sent if the child is under 13. Teens aged 13-17 will only get a warning notification on their own phones.
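The age rules above boil down to a small decision table, sketched here in Python. The function name `message_actions` and its parameters are hypothetical, and treating anyone 18 or older as unaffected is an assumption, since the rules described only cover children and teens.

```python
def message_actions(age: int, proceeds: bool) -> dict:
    """Decide what happens when a flagged image is received or about to be sent.

    `proceeds` means the user views or sends the image despite the warning.
    """
    if age >= 18:
        # Assumption: adults are outside the scope of this feature.
        return {"warn": False, "notify_parents": False}
    return {
        "warn": True,  # every child or teen account sees the warning
        "notify_parents": age < 13 and proceeds,  # parents only hear about under-13s
    }
```

For example, `message_actions(12, True)` warns the child and notifies the parents, while `message_actions(15, True)` only shows the warning on the teen’s own phone.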

The company is also tweaking Siri and Search to provide additional CSAM-related resources for parents and children. Plus, if someone performs CSAM-related searches, Siri can intervene and warn them about the content.

What are experts worried about?

All of Apple’s features sound like they’re meant to help stop CSAM, and it looks like a good thing on paper.

However, digital rights organization Electronic Frontier Foundation (EFF) criticized Apple in a post and noted that the company’s implementation opens up potential backdoors in an otherwise robust encryption system.

“To say that we are disappointed by Apple’s plans is an understatement,” EFF added. The organization pointed out that scanning for content using a pre-defined database could lead to dangerous use cases. For instance, in a country where homosexuality is a crime, the government “might require the classifier to be trained to restrict apparent LGBTQ+ content.”

Edward Snowden argued that Apple is rolling out a mass surveillance tool, and that if the company can scan for CSAM today, it can scan for anything on your phone tomorrow.

No matter how well-intentioned, @Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.

They turned a trillion dollars of devices into iNarcs—*without asking.* https://t.co/wIMWijIjJk

— Edward Snowden (@Snowden) August 6, 2021

Matthew Green, a security professor at Johns Hopkins University, said that whoever controls the list of images matched during scanning has too much power. Governments across the world have been asking various companies to provide backdoors to encrypted content. Green noted that Apple’s step “will break the dam — governments will demand it from everyone.”

The theory is that you will trust Apple to only include really bad images. Say, images curated by the National Center for Missing and Exploited Children (NCMEC). You’d better trust them, because trust is all you have.

— Matthew Green (@matthew_d_green) August 5, 2021

If you want to read more about why people should be asking more questions, researcher David Thiel has a thread on sexting detection. Plus, former Facebook CSO Alex Stamos and Stanford researcher Riana Pfefferkorn, along with Thiel and Green, have an hour-long discussion on YouTube on the topic.

Apple’s features for CSAM are coming later this year in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey. However, it’ll be in the company’s interest to address various concerns put forward by security researchers and legal experts.

The company has rallied around its strong stance on privacy for years. With the firm now under pressure for taking an allegedly anti-privacy step, it needs to hold discussions with experts and mend this system. Plus, Apple will have to be transparent about how these systems perform.

We have had numerous examples of tech like facial recognition going wrong and innocent people being accused of crimes they never committed. We don’t need more of that.
